C-Transformer: Homogeneous DNN-Transformer/Spiking-Transformer Process…


Overview

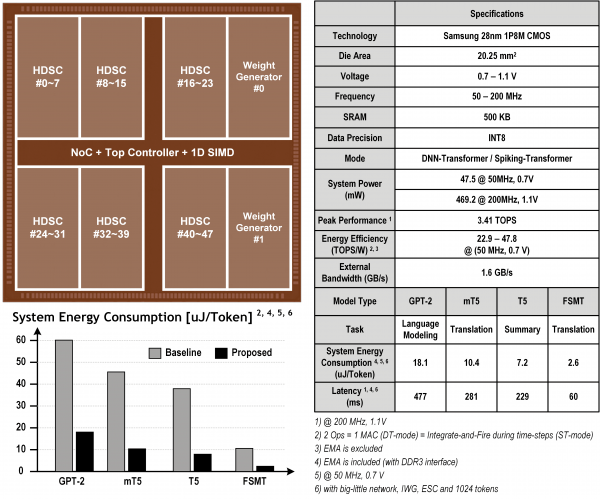

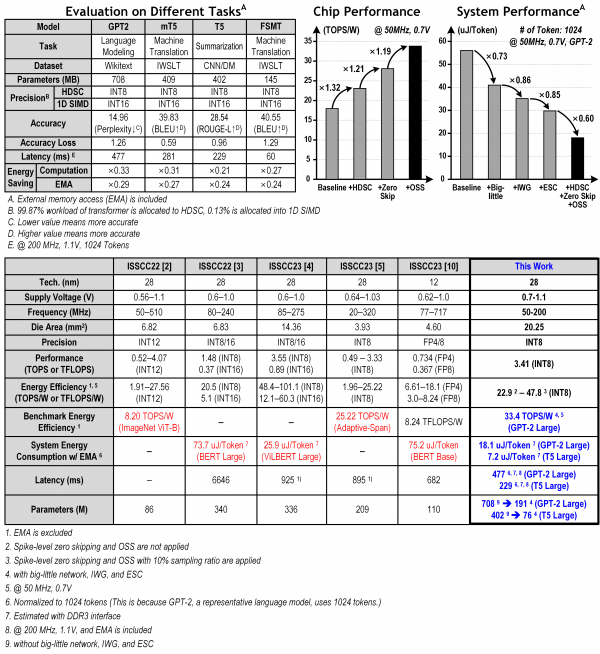

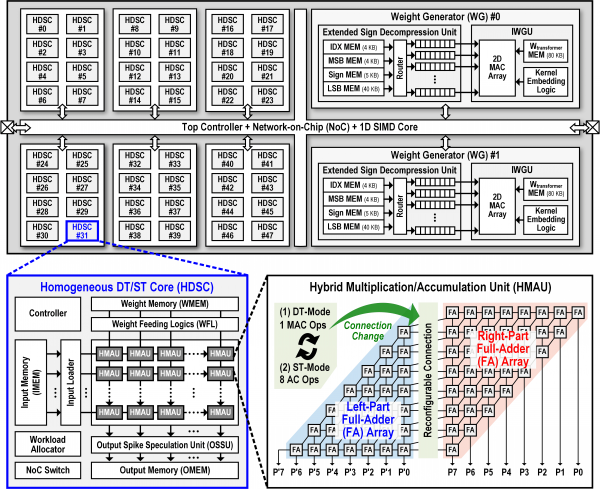

In this article, we propose the C-Transformer, a processor that uses a big-little network and implicit weight generation (IWG) to address the external memory bottleneck of large language models (LLMs). It has three key functional blocks: 1) a homogeneous DNN-Transformer/Spiking-Transformer core with a hybrid multiplication/accumulation unit to increase hardware utilization; 2) an output spike speculation unit to increase the energy efficiency of spike-domain processing; and 3) an IWG unit with extended sign compression to eliminate the external memory bottleneck.

The chip operates at a 0.7-1.1V supply voltage with a maximum frequency of 200MHz. It supports various tasks such as language modeling, translation, and summarization. For GPT-2, mT5, T5, and FSMT, the C-Transformer consumes 0.21-0.33× the computation energy and 0.24-0.29× the external memory access (EMA) energy of the baseline. Our chip consumes 30.2% less energy than the previous state of the art even though our model has 2.1× as many parameters, and 72.2% less energy at a similar parameter size. The C-Transformer completes various LLM tasks in under 0.5s, for example FSMT in 0.06s and GPT-2 in 0.477s.

By combining the DNN-Transformer and the Spiking-Transformer, the C-Transformer increases computation energy efficiency without an EMA bottleneck and enables LLMs such as GPT-2 to run on mobile devices with state-of-the-art system performance.
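Part of the motivation for spike-domain processing is that binary spikes turn multiply-accumulate operations into accumulate-only operations. The sketch below illustrates that general idea with textbook integrate-and-fire rate coding. It is a generic illustration under our own assumptions, not the C-Transformer's datapath, hybrid multiplication/accumulation unit, or output spike speculation; the names `if_encode` and `spike_matvec` are hypothetical. With subtract-reset encoding over T timesteps, each input in [0, 1] is approximated by floor(T·x)/T, so the per-input error is below 1/T. Whether this saves energy in practice depends on spike sparsity and the number of timesteps.

```python
import numpy as np


def if_encode(x: np.ndarray, timesteps: int) -> np.ndarray:
    """Deterministic integrate-and-fire rate coding (illustrative assumption).

    Each input in [0, 1] is added to a membrane potential every timestep.
    A spike (1) is emitted, and 1.0 is subtracted, whenever the potential
    reaches 1.0. Returns a 0/1 array of shape (timesteps, *x.shape).
    """
    potential = np.zeros(x.shape, dtype=np.float64)
    spikes = np.zeros((timesteps,) + x.shape, dtype=np.uint8)
    for t in range(timesteps):
        potential += x
        fired = potential >= 1.0
        spikes[t] = fired
        potential[fired] -= 1.0  # subtract-reset keeps the residual charge
    return spikes


def spike_matvec(weights: np.ndarray, spikes: np.ndarray) -> np.ndarray:
    """Multiply-free matrix-vector product over binary spike trains.

    At each timestep, the weight columns of the inputs that spiked are
    simply added; no weight-activation multiplications are performed.
    A single division per output rescales the spike count to a rate.
    """
    timesteps = spikes.shape[0]
    acc = np.zeros(weights.shape[0], dtype=np.float64)
    for t in range(timesteps):
        active = spikes[t].astype(bool)
        acc += weights[:, active].sum(axis=1)  # accumulate only
    return acc / timesteps  # rate-decode the accumulated result


# Usage: compare the spike-domain result with an ordinary matmul.
W = np.array([[1.0, -2.0, 0.5],
              [0.0, 3.0, 1.0]])
x = np.array([0.5, 0.25, 1.0])
spikes = if_encode(x, timesteps=8)
approx = spike_matvec(W, spikes)
print(approx, W @ x)  # both equal [0.5, 1.75] for these inputs
```

Here every input is a multiple of 1/8, so 8 timesteps reproduce `W @ x` exactly. Other inputs would need more timesteps for comparable accuracy, which is one reason spike-domain energy savings are workload dependent.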

Features

- Homogeneous DNN-Transformer/Spiking-Transformer core

- Output spike speculation

- 3-stage weight compression (big-little network, implicit weight generation, extended sign compression)

Related Papers

- ISSCC 2024