SNPU: Ultralow-power Spike Domain Deep-Neural-Network Processor

Main Text

Overview

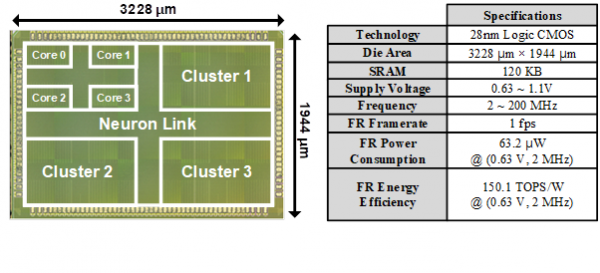

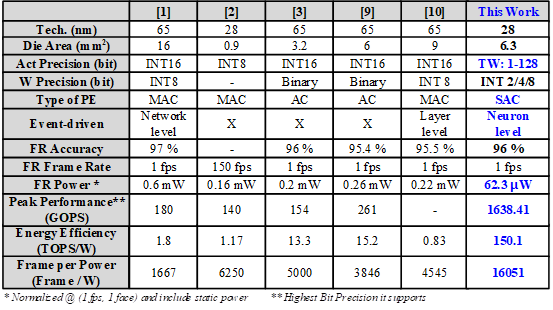

In this paper, we propose SNPU, a spike-domain DNN accelerator that achieves ultralow power consumption through neuron-level event-driven inference. Spike-domain processing can replace many MACs with a small number of accumulations. The proposed SNPU has three key features: spike train decomposition, which reduces the number of accumulations; time shrinking multi-level encoding, which replaces multiple accumulations with a single shift-and-accumulation and adopts bit scalability to enable different always-on applications; and neuron link, which supports various time windows so that the time window can be minimized layer by layer for optimal energy consumption, and which increases PE utilization.

For face recognition with the LFW dataset, the proposed spike-domain processing reduces energy consumption by 43.9% with less than 1% accuracy loss. If there is no face in the input image, the energy consumption can be reduced further by 87.6%.

The SNPU is fabricated in 28-nm CMOS technology. It occupies a 6.3 mm² die area with 120 KB of on-chip SRAM and operates at clock frequencies from 2 MHz to 200 MHz with supply voltages from 0.63 V to 1.1 V. It achieves an energy efficiency of 150.1 TOPS/W during face recognition.

The original DNN, before spike-domain conversion, is composed of 5 convolutional layers and 1 fully connected layer with 326 KB of parameters. The number of shift-and-accumulations in each layer of the SNPU is only ~25% of the MAC operations in the original CNN. The SNPU achieves 96% accuracy on the LFW dataset with a latency of only 0.681 ms. The maximum throughput is 147K fps, and the peak performance is 15.9 TOPS. In conclusion, a 63.2 μW ultralow-power spike-domain DNN processor with spike train decomposition, time shrinking multi-level encoding, and neuron link is proposed.
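The claim that spike-domain processing replaces MACs with accumulations can be illustrated with a minimal Python sketch. It assumes binary (0/1) spikes and integer weights. The function names `mac_dot` and `spike_accumulate` are hypothetical and are not taken from the SNPU design, so this shows the general idea only, not the chip's datapath.

```python
def mac_dot(weights, activations):
    """Conventional dot product: one multiply-accumulate per input."""
    return sum(w * a for w, a in zip(weights, activations))


def spike_accumulate(weights, spikes):
    """Event-driven dot product for binary spikes (assumed 0 or 1).

    A weight is added only when its input spiked, so no multiplier
    is needed and silent inputs cost no operation.
    Returns (accumulated sum, number of accumulations performed).
    """
    acc = 0
    n_accumulations = 0
    for w, s in zip(weights, spikes):
        if s:  # skip inputs that did not fire
            acc += w
            n_accumulations += 1
    return acc, n_accumulations


weights = [3, -1, 4, 2]
spikes = [1, 0, 0, 1]
total, ops = spike_accumulate(weights, spikes)
print(total, ops)
```

With these inputs, both functions produce the same sum, but the event-driven version performs two additions instead of four multiply-accumulates.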

Features

- Spike train decomposition, which reduces the number of accumulations

- Time shrinking multi-level encoding, which replaces multiple accumulations with a single shift-and-accumulation and adopts bit scalability to enable different always-on applications

- Neuron link, which supports various time windows so that the time window can be minimized layer by layer for optimal energy consumption, and which increases PE utilization
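The sketch below illustrates how a single shift-and-accumulation can stand in for several accumulations. It assumes that one multi-level spike at level `k` represents `2**k` unit spikes. That power-of-two mapping is an assumption made for this example, since the encoding details are not given here, and both function names are hypothetical.

```python
def rate_coded_accumulate(weight, spike_train):
    """Add the weight once per unit spike in the time window.

    Returns (accumulated value, number of accumulations).
    """
    acc = 0
    ops = 0
    for s in spike_train:
        if s:
            acc += weight
            ops += 1
    return acc, ops


def shift_accumulate(weight, level):
    """Add the weight once, shifted left by the spike level.

    Assumption for this sketch: a spike at level k stands for
    2**k unit spikes, so weight << k equals weight * 2**k.
    Returns (accumulated value, number of shift-and-accumulations).
    """
    return weight << level, 1


# Four unit spikes versus one level-2 spike (2**2 = 4).
print(rate_coded_accumulate(5, [1, 1, 1, 1]))
print(shift_accumulate(5, 2))
```

Under this assumption, both calls accumulate the same value, but the multi-level version needs one operation instead of four and covers the window in fewer time steps.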

Related Papers

- A-SSCC 2022