Neuro-CIM: Neuromorphic Computing-in-Memory Processor

Overview

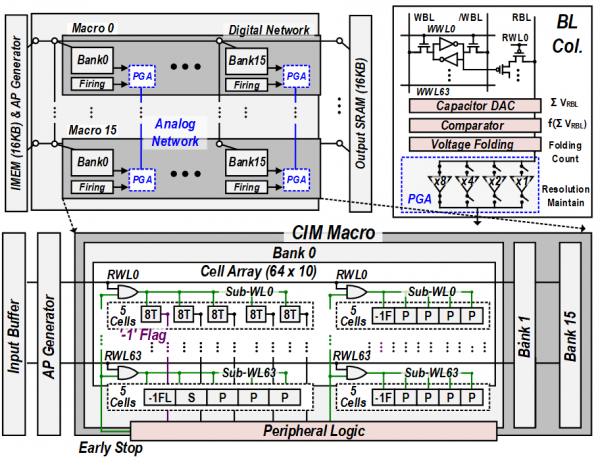

Previous CIM AI processors have not achieved energy efficiency above 100 TOPS/W because they require multiple-WL driving for parallelism and many high-precision ADCs for high accuracy. Some CIMs heavily exploited weight data sparsity to obtain high energy efficiency, but their sparsity was limited to <30% in practical cases (e.g., the ImageNet task with ResNet-18) to keep accuracy loss below 1%. In this paper, we propose Neuro-CIM, which uses neuromorphic principles to raise energy efficiency above 100 TOPS/W and achieve <1% accuracy loss without high-precision ADCs, regardless of data sparsity. It converts input data into an Action Potential (AP) spike train by spike encoding, and the APs are weighted and integrated to generate the Membrane Potential (VMEM) during a predefined time window. The proposed Neuro-CIM achieves 62.1-310.4 TOPS/W energy efficiency across 1b-8b weights with four key features: 1) MSB word skipping (MWS), which reduces the BL activity and power consumption of the SRAM by 25-38% across 4b-8b weights; 2) early stopping (ES), which terminates the VMEM integration early when the neuron is speculated not to fire, improving power efficiency by 31%; 3) mixed-mode firing, which accelerates not only single-macro SNN computation but also multi-macro aggregation; 4) a voltage folding scheme, which extends the VMEM dynamic range by 3x without sacrificing resolution, benefiting from residue amplification while using only 1b dynamic comparators. Neuro-CIM outperforms state-of-the-art CIM processors in energy efficiency by 4.09x.
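The encode-then-integrate idea described above can be illustrated with a small software model. This is only a sketch under stated assumptions, not the circuit in the paper: it assumes a simple unary (rate) code in which an integer input `x` becomes `x` spikes inside the time window, and the names `encode_spikes` and `integrate_vmem` are hypothetical. Under that assumption, accumulating the weight of every spiking input at each time step yields the same value as an ordinary dot product, without any multi-bit conversion of the partial sums.

```python
def encode_spikes(value, window):
    """Encode an integer in [0, window] as a spike train of length `window`.

    Assumed unary/rate code: the first `value` slots carry a spike (1).
    """
    if not 0 <= value <= window:
        raise ValueError("value must lie within the time window")
    return [1 if t < value else 0 for t in range(window)]


def integrate_vmem(inputs, weights, window):
    """Accumulate weighted spikes over the time window (software stand-in for VMEM)."""
    trains = [encode_spikes(x, window) for x in inputs]
    vmem = 0
    for t in range(window):
        for w, train in zip(weights, trains):
            if train[t]:          # a spike at time t adds that input's weight
                vmem += w
    return vmem


inputs = [3, 0, 7, 2]             # illustrative activations
weights = [2, -1, 1, -3]          # illustrative signed weights
vmem = integrate_vmem(inputs, weights, window=8)
dot = sum(w * x for w, x in zip(weights, inputs))
```

Here `vmem` and `dot` are equal, which is the property the spike-domain accumulation relies on in this simplified model.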

Features

- MSB word skipping (MWS) reduces the BL activity and power consumption of the SRAM by 25-38% across 4b-8b weights

- Early stopping (ES) terminates the VMEM integration early when the neuron is speculated not to fire, improving power efficiency by 31%

- Mixed-mode firing accelerates not only single-macro SNN computation but also multi-macro aggregation

- Voltage folding scheme extends the VMEM dynamic range by 3x without sacrificing resolution, benefiting from residue amplification while using only 1b dynamic comparators
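As a rough illustration of the early stopping feature, the sketch below ends integration as soon as the neuron can no longer reach its firing threshold. The stopping rule is an assumption made for this example: it uses a conservative upper bound (all positive-weight inputs spiking in every remaining step), whereas the paper describes a speculative decision whose exact criterion is not given here. The function name `integrate_with_early_stop` and the threshold value are hypothetical.

```python
def integrate_with_early_stop(trains, weights, threshold):
    """Integrate weighted spikes, stopping once firing is impossible.

    trains:  one 0/1 spike list per input, all of the same length
    returns: (vmem, steps_used, fired)
    """
    window = len(trains[0])
    # Largest possible gain per time step: every positive-weight input spikes.
    max_gain_per_step = sum(w for w in weights if w > 0)
    vmem = 0
    for t in range(window):
        vmem += sum(w for w, train in zip(weights, trains) if train[t])
        remaining_steps = window - (t + 1)
        # Assumed rule: stop if even the best case stays below the threshold.
        if vmem + remaining_steps * max_gain_per_step < threshold:
            return vmem, t + 1, False
    return vmem, window, vmem >= threshold


# A strongly inhibited neuron is abandoned after the first step.
trains = [[1, 1, 0, 0, 0, 0, 0, 0], [1] * 8]
stopped = integrate_with_early_stop(trains, weights=[1, -4], threshold=5)

# A neuron that can still fire runs through the whole window.
full = integrate_with_early_stop([[1] * 4, [1] * 4], weights=[2, 1], threshold=10)
```

In this model the saving comes from the skipped time steps: the first call uses 1 of 8 steps, while the second uses all 4 and fires.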

Related Papers

- S.VLSI 2022