Slim-Llama: LLM Processor with Binary/Ternary Weights for Billion-Par…


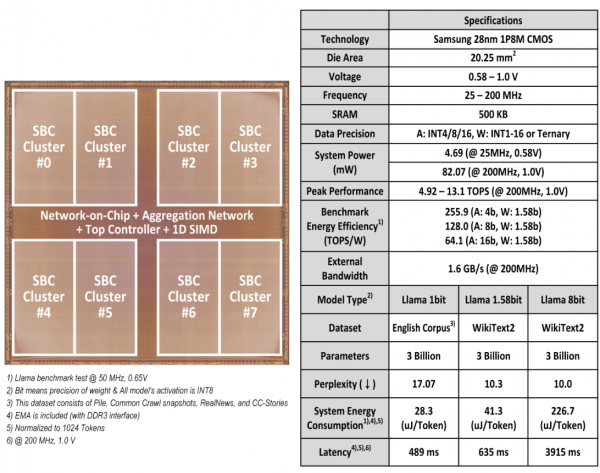

Overview

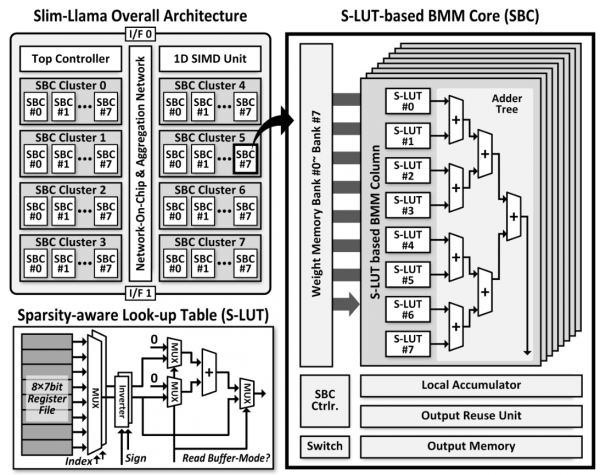

Slim-Llama is an ASIC designed to reduce the high energy consumption of large language models (LLMs) caused by external memory access. It quantizes weights to binary/ternary values, which shrinks the weight data that must be fetched, and integrates a Sparsity-aware Look-up Table (S-LUT) to significantly improve energy efficiency. An output reuse scheme and index vector reordering further enhance performance, yielding up to 4.59× better benchmark energy efficiency than the previous state of the art. It is the first ASIC to efficiently run a billion-parameter Llama model, consuming 4.69 mW.
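The general idea behind look-up-table (LUT) based ternary matrix multiplication can be sketched in a few lines of Python. This is a generic illustration, not Slim-Llama's actual datapath. The group size `GROUP`, the helper names `build_lut` and `lut_gemv`, and the skipping of all-zero weight patterns (a simple stand-in for sparsity awareness) are assumptions made for the example. Because every weight is -1, 0, or +1, the dot product of a small activation group with every possible weight pattern can be precomputed once, and every output row then replaces multiplications with table look-ups.

```python
from itertools import product

# Hypothetical group size: number of activations that share one look-up table.
GROUP = 2
# All ternary weight patterns for one group: (-1, -1), (-1, 0), ..., (1, 1).
PATTERNS = list(product((-1, 0, 1), repeat=GROUP))
PATTERN_INDEX = {p: i for i, p in enumerate(PATTERNS)}


def build_lut(x_group):
    """Precompute the dot product of one activation group with every ternary pattern."""
    return [sum(w * x for w, x in zip(p, x_group)) for p in PATTERNS]


def lut_gemv(weights, x):
    """Compute y = W @ x for ternary W using table look-ups instead of multiplies.

    weights: list of rows, each a list of -1/0/+1 values with len(row) == len(x).
    x: activation vector whose length is a multiple of GROUP.
    """
    assert len(x) % GROUP == 0
    groups = [tuple(x[i:i + GROUP]) for i in range(0, len(x), GROUP)]
    # Each table is built once and then reused by every output row.
    luts = [build_lut(g) for g in groups]
    y = []
    for row in weights:
        assert len(row) == len(x)
        acc = 0
        for k, lut in enumerate(luts):
            pattern = tuple(row[k * GROUP:(k + 1) * GROUP])
            if all(w == 0 for w in pattern):
                continue  # an all-zero pattern contributes nothing, so skip the look-up
            acc += lut[PATTERN_INDEX[pattern]]
        y.append(acc)
    return y


# Usage: three ternary rows applied to a 4-element activation vector.
W = [[1, -1, 0, 1], [0, 0, -1, -1], [1, 1, 1, 1]]
x = [3, 5, 2, 7]
print(lut_gemv(W, x))  # → [5, -9, 17]
```

Each table holds 3^GROUP entries, so the savings depend on reusing every table across many output rows; a larger group means fewer look-ups per row but an exponentially larger table.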

Features

- Output reuse in the S-LUT-based BMM Core (SBC)

- Dual-mode sparsity-aware look-up table (S-LUT), supporting LUT mode and buffer mode, with sparsity-aware workload allocation

- Index vector reordering to reduce bit transitions
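Why reordering reduces energy can be illustrated with a toy model of switching activity: every bit that flips between consecutive index words toggles a wire or register and costs dynamic energy. The sketch below is an illustration under stated assumptions, not the paper's reordering scheme. It treats index vectors as small integers, measures transitions as the Hamming distance between neighbors, and uses a greedy nearest-neighbor ordering as a stand-in heuristic. The helpers `bit_transitions` and `greedy_reorder` are hypothetical; the latter returns a permutation so results can be mapped back to their original positions.

```python
def bit_transitions(seq):
    """Count the bits that toggle between consecutive words in a sequence."""
    return sum(bin(a ^ b).count("1") for a, b in zip(seq, seq[1:]))


def greedy_reorder(seq):
    """Return an ordering of positions that greedily minimizes neighbor Hamming distance.

    Assumes the words may be processed in any order; the returned list holds
    positions into seq so each result can be written back to its original slot.
    """
    if not seq:
        return []
    remaining = list(range(1, len(seq)))
    order = [0]
    while remaining:
        last = seq[order[-1]]
        # Pick the remaining word that differs from the last one in the fewest bits.
        nxt = min(remaining, key=lambda i: bin(last ^ seq[i]).count("1"))
        remaining.remove(nxt)
        order.append(nxt)
    return order


# Usage: a short stream of 4-bit index words.
indices = [0b0000, 0b1111, 0b0001, 0b1110, 0b0011, 0b1100]
order = greedy_reorder(indices)
print(bit_transitions(indices))                      # → 18
print(order)                                         # → [0, 2, 4, 1, 3, 5]
print(bit_transitions([indices[i] for i in order]))  # → 6
```

The greedy pass is cheap but not guaranteed optimal: finding the minimum-transition order is a traveling-salesman-style path problem, so a hardware or compiler implementation would likely trade optimality for speed.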

Related Papers

- ISSCC 2025