OmniDRL: Energy-efficient Deep Reinforcement Learning Processor

Overview

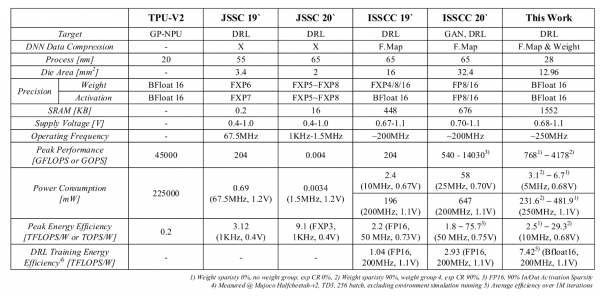

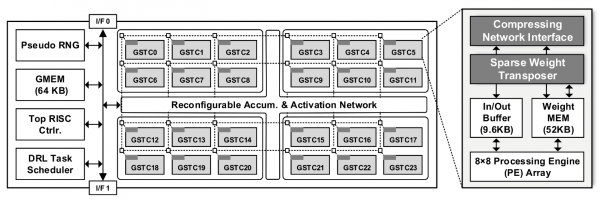

We present OmniDRL, an energy-efficient deep reinforcement learning (DRL) processor for DRL training on edge devices. The need for on-device DRL training is growing because DRL can adapt to each individual user. However, the massive amount of external and internal memory access that DRL training requires makes it difficult to implement on resource-constrained platforms.

OmniDRL proposes four key features. They reduce external memory access by compressing as much data as possible, and they reduce internal memory access by processing the compressed data directly. Group-sparse training (GST) enables a high weight compression ratio in every DRL iteration. The group-sparse training core is designed to take full advantage of the weights compressed by GST. Exponent-mean delta encoding further compresses the exponents of both weights and feature maps. Finally, the world's first on-chip sparse weight transposer allows DRL training to run on compressed weights without an off-chip transposer.

OmniDRL is fabricated in 28 nm CMOS technology and occupies a 3.6 × 3.6 mm² die area. It achieves an energy efficiency of 7.42 TFLOPS/W when training a robot agent (MuJoCo HalfCheetah, TD3), 2.4× higher than the previous state of the art.
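Training uses the weight matrix in both directions: the forward pass computes y = W x and the backward pass computes dx = Wᵀ dy. As a rough software analogy only, and not OmniDRL's actual GST algorithm or hardware dataflow, the sketch below prunes weights in fixed-size row groups by L2 norm (a stand-in pruning rule), runs the forward product over stored groups only, and computes the transposed product straight from the same compressed layout. `GROUP`, `GroupSparseMatrix`, `compress`, `matvec`, and `matvec_t` are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass, field

GROUP = 4  # hypothetical group size: consecutive weights in a row are pruned/kept together


@dataclass
class GroupSparseMatrix:
    rows: int
    cols: int
    # (row, group_index) -> GROUP weights; pruned groups are simply absent
    groups: dict = field(default_factory=dict)


def compress(w, threshold):
    """Keep only row-groups whose L2 norm reaches `threshold` (a stand-in pruning rule)."""
    rows, cols = len(w), len(w[0])
    if cols % GROUP:
        raise ValueError("column count must be a multiple of GROUP")
    m = GroupSparseMatrix(rows, cols)
    for r in range(rows):
        for g in range(cols // GROUP):
            vals = w[r][g * GROUP:(g + 1) * GROUP]
            if math.sqrt(sum(v * v for v in vals)) >= threshold:
                m.groups[(r, g)] = list(vals)
    return m


def matvec(m, x):
    """Forward pass y = W x: only stored groups are visited; pruned groups are skipped."""
    y = [0.0] * m.rows
    for (r, g), vals in m.groups.items():
        base = g * GROUP
        y[r] += sum(v * x[base + i] for i, v in enumerate(vals))
    return y


def matvec_t(m, dy):
    """Backward pass dx = W^T dy, computed straight from the row-grouped layout.

    No dense (or re-compressed) copy of W^T is built; each stored group is
    scattered into dx instead.
    """
    dx = [0.0] * m.cols
    for (r, g), vals in m.groups.items():
        base = g * GROUP
        for i, v in enumerate(vals):
            dx[base + i] += v * dy[r]
    return dx


if __name__ == "__main__":
    w = [[1.0, 2.0, 0.0, 0.0, 0.01, 0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0, 0.0, 3.0, 4.0, 0.0, 0.0]]
    m = compress(w, threshold=0.1)
    print(len(m.groups), "of", 2 * (8 // GROUP), "groups stored")  # 2 of 4 groups stored
    print(matvec(m, [1.0] * 8))        # [3.0, 7.0]
    print(matvec_t(m, [1.0, 1.0]))     # [1.0, 2.0, 0.0, 0.0, 3.0, 4.0, 0.0, 0.0]
```

Both passes walk the same compressed dictionary, so nothing is decompressed. In hardware, the transposed pass needs the groups in column order rather than the row order they were compressed in; that reordering is presumably the problem the on-chip sparse weight transposer addresses.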

Features

- Group-sparse training

- Group-sparse training core

- Exponent-mean delta encoding

- Sparse weight transposer
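The page gives no bit-level format for exponent-mean delta encoding. One plausible reading of the name, sketched below purely as an illustration, is: within a group of float32 values (weights or feature-map activations), store the group's mean exponent once and each value's exponent as a small signed delta from that mean, leaving signs and mantissas untouched. The group contents, `DELTA_BITS`, the overflow rule, and all function names are hypothetical. Zeros and subnormals, whose exponent field is 0, would normally overflow the delta and need separate handling.

```python
import struct

DELTA_BITS = 4  # hypothetical width of each stored exponent delta (signed)


def f32_fields(x):
    """Split a value (rounded to float32) into sign, 8-bit biased exponent, 23-bit mantissa."""
    b = struct.unpack("<I", struct.pack("<f", x))[0]
    return b >> 31, (b >> 23) & 0xFF, b & 0x7FFFFF


def f32_from_fields(sign, exp, mant):
    """Reassemble a float32 from its three bit fields."""
    b = (sign << 31) | (exp << 23) | mant
    return struct.unpack("<f", struct.pack("<I", b))[0]


def encode_group(values):
    """Exponent-mean delta encoding sketch for one group of values.

    Stores the rounded mean exponent once plus a signed delta per value.
    Returns None if any delta does not fit in DELTA_BITS (a real design would
    need a fallback, e.g. storing raw exponents for that group).
    """
    fields = [f32_fields(v) for v in values]
    mean_exp = round(sum(e for _, e, _ in fields) / len(fields))
    deltas = [e - mean_exp for _, e, _ in fields]
    lo, hi = -(1 << (DELTA_BITS - 1)), (1 << (DELTA_BITS - 1)) - 1
    if any(d < lo or d > hi for d in deltas):
        return None
    signs = [s for s, _, _ in fields]
    mants = [m for _, _, m in fields]
    return mean_exp, deltas, signs, mants


def decode_group(encoded):
    """Rebuild each exponent as mean + delta and reassemble the float32 values."""
    mean_exp, deltas, signs, mants = encoded
    return [f32_from_fields(s, mean_exp + d, m) for s, d, m in zip(signs, deltas, mants)]


def exponent_storage_bits(n):
    """(raw, encoded) exponent bits for n values: 8 per value vs. one 8-bit mean plus DELTA_BITS per value."""
    return 8 * n, 8 + DELTA_BITS * n


if __name__ == "__main__":
    group = [0.5, 1.0, -2.0, 3.0, 0.75]   # biased exponents 126, 127, 128, 128, 126
    enc = encode_group(group)
    print(enc[0], enc[1])                  # 127 [-1, 0, 1, 1, -1]
    print(decode_group(enc) == group)      # True (these values are exact in float32)
    print(exponent_storage_bits(len(group)))  # (40, 28)
    print(encode_group([1.0, 1e-30]))      # None: exponents too far apart
```

With 4-bit deltas, five values need 28 exponent bits instead of 40, and the saving grows with group size as long as the exponents within each group stay inside the delta range.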

Related Papers

- S.VLSI 2021

- Hot Chips 2021

- AICAS 2021

- AICAS 2022