K-GLASS II


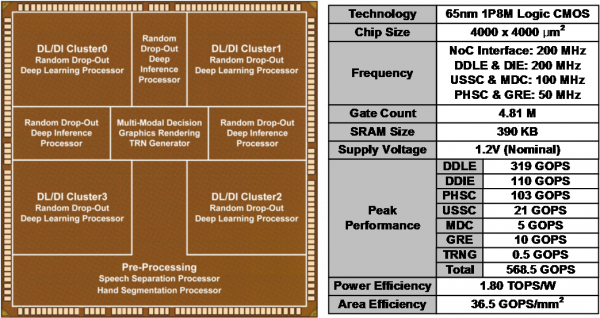

Overview

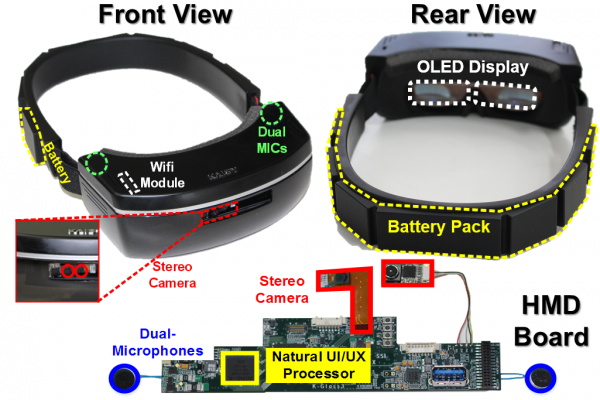

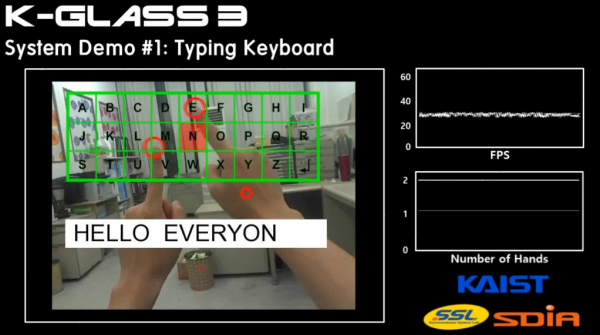

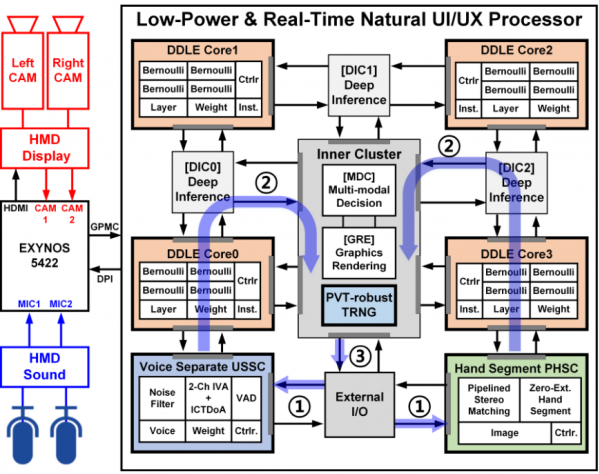

Wearable head-mounted display (HMD) smart devices are emerging as a smartphone substitute because they are easy to use and well suited to advanced applications such as gaming and augmented reality (AR). Most current HMD systems suffer from 1) a lack of rich user interfaces, 2) short battery life, and 3) heavy weight. Although current HMDs (e.g., Google Glass) use a touch panel and voice commands as the interface, these interfaces are merely extensions of smartphone interfaces and are not optimized for HMDs. Gaze has recently been proposed as an HMD user interface, but it cannot deliver a natural user interface and experience (UI/UX) because of its limited interactivity and lengthy gaze calibration (several minutes).

In this paper, gesture and speech recognition are proposed as a natural UI/UX, built on two pre-processing pipelines: 1) speech pre-processing, consisting of 2-channel ICA (independent component analysis), speech selection, and noise cancellation; and 2) gesture pre-processing, consisting of depth/color-map generation, hand detection, hand segmentation, and noise cancellation. This paper presents a low-power natural UI/UX processor with an embedded deep-learning core (NINEX) that provides wearable AR for HMD users without calibration. It also achieves higher recognition accuracy than previous work.

Features

- 5-stage Pipelined Hand Segmentation Core (PHSC)

- User’s Voice Activated Speech Separation Core (USSC)

- Dropout Deep Learning Engine (DDLE)

- True Random Number Generator (TRNG)
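The feature list pairs a Dropout Deep Learning Engine (DDLE) with a True Random Number Generator (TRNG). The page does not say how the two interact; one plausible reading, treated here purely as an assumption, is that the TRNG supplies the random bits that choose which neurons dropout disables. The Python sketch below illustrates that idea in software only: `dropout_mask`, `dense`, and `forward` are hypothetical names, the layer sizes are arbitrary, and `secrets.randbits` (operating-system entropy) merely stands in for a hardware TRNG. The `rand_bits` parameter lets a seeded generator be swapped in for reproducible runs.

```python
import random
import secrets

# Hypothetical sketch: dropout in a small multilayer perceptron, with the
# random bits for the dropout mask coming from a pluggable source. Using a
# TRNG for these bits is an assumption; secrets.randbits only stands in for one.

def dropout_mask(n, keep_prob, rand_bits=secrets.randbits, resolution=8):
    """Keep each of n units when a `resolution`-bit random word falls below keep_prob * 2**resolution."""
    threshold = round(keep_prob * (1 << resolution))
    return [rand_bits(resolution) < threshold for _ in range(n)]

def dense(x, weights, bias):
    """Fully connected layer: one dot product per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def forward(x, layers, keep_prob=0.5, training=True, rand_bits=secrets.randbits):
    """Run an MLP; apply ReLU and (inverted) dropout to every hidden layer."""
    h = x
    for i, (weights, bias) in enumerate(layers):
        h = dense(h, weights, bias)
        if i == len(layers) - 1:
            break  # no activation or dropout on the output layer
        h = [max(0.0, t) for t in h]
        if training:
            mask = dropout_mask(len(h), keep_prob, rand_bits)
            # Inverted dropout: scale survivors by 1/keep_prob so that inference
            # (training=False) needs no rescaling. A dropped unit is zero, so
            # hardware could skip its downstream multiplies.
            h = [t / keep_prob if keep else 0.0 for t, keep in zip(h, mask)]
    return h

if __name__ == "__main__":
    layers = [([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]), ([[1.0, 1.0]], [0.0])]
    print(forward([2.0, 1.0], layers, training=False))  # [2.5]
    seeded = random.Random(0).getrandbits  # reproducible stand-in for testing
    print(forward([2.0, 1.0], layers, keep_prob=0.5, rand_bits=seeded))
```

Inverted dropout was chosen so that the same weights can be used unchanged at inference time; whether the DDLE scales activations this way is not stated on this page.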

Related Papers

- ISSCC 2016 [pdf]